AI: The Commander and the lie detector

Written by Dr Peter Roberts for Aureliusthinking.com and reproduced with his kind permission.

It seems likely that AI will provide the military commander with more options, advice, and courses of action (COAs) in the near future. That should be good news.

Smarter AI systems should also become more nuanced; rather than just examining topography, geography, correlation of forces, resource availability, time and space, command arrangements, optimal synchronisation, and intent, good systems will also look at how the enemy is fighting. That examination of tactics – updated day-by-day as fighting styles adapt to the fight they are in – will impact on the COA that a commander will select.

That’s all good news, and senior military personnel seem to be getting rather excited about it, as do the journalists covering it. What is less certain is the quality of the data that sits underneath the AI code: how clean is it? How reliable? Has it been verified? Has it been validated? What’s its veracity? Can it be ingested into the model and the coding applied? Has it been weighted properly? Is it relevant to the current fight, or the next one?

Dr Peter Roberts

Dr Peter Roberts is the host of the 'Command and Control' podcast. He is both a Royal Navy veteran and the former Director of Military Sciences at the Royal United Services Institute.

In addition, he is a regular global commentator and advisor on military affairs.

Data in = data out

These questions about the source and veracity of the data are part of the role of an organisation’s Chief Technology Officer (CTO, often synonymous with the J6 specialisation). Beyond considerations of technical utility and functionality, however, judging the relative merits and applicability of the data, how it is weighted, and which models it is fed into is a commander’s job.

If those factors are poorly conceived, the results will be equally poor (perhaps disastrously so). Conversely, well selected, crafted, curated and honed data, pushed through the right model, will have relevance to the moment and the potential to be extremely useful. That means this vital role is one that the commander must engage in personally. And, as it stands today, only a handful of commanders across the world’s military forces do so (interestingly, these are also the commanders who seem least preoccupied with the mantras of ‘speed’, ‘faster’, and ‘pace’).

In these contexts, data is not the CTO’s job; it is the commander’s. If the commander fails to determine which data sets, models and weighting should be used for that specific fight, at that specific moment, the suggestions coming from the AI system will be worse than sub-optimal.

The possibility of false information

As we have seen in numerous system tests with ML and AI streams (including Large Language Models), when the system is unsure it does not indicate its level of certainty about its recommendations and answers; instead, it simply lies (something that has become known as AI bullshitting, an evolution of the ‘hallucination’ taxonomy previously used[1]).

Indeed, the idea that AI models weigh evidence and argue on the basis of finding ‘truth’ is false. LLMs are inherently more concerned with sounding truthful than with delivering a factually correct response. Even where decisions are of lesser importance, this poor advice can have significant consequences in terms of financial or reputational damage. In some circumstances, it could even lead to physical damage and injury. For war fighting commanders, decisions guided by AI under false premises are likely to result in death and destruction.

Information verification

This is important – clearly – but it does not mean that military commanders need to develop detailed system and data knowledge. There is insufficient time for these people to add that specialist skill to the already heavy education and training agenda required of them.

Rather, commanders need to be given technical and technological confidence: something that will enable them to understand and articulate their needs and be assured that their C2 systems are working off useful data and not something trawled from an irrelevant fight. In essence, they will need a bullshit detector for dealing with AI systems and models.

This will be a significant issue in the near future. Most Western commanders see themselves as ‘big picture’ people, leaving the detail and the ‘weeds’ to both their headquarters staff and subordinates. Adding a smattering of ‘mission command’ delegation and a bit of junior empowerment makes everyone feel secure in their decisions. Yet Western commanders have shown little willingness (or ability) to involve themselves in the process detail that sets the critical intellectual conditions for battles.

For example, it is rare to see a commander do more than cast an eye over his ‘personal’ CCIRs (Commander’s Critical Information Requirements). Usually this is left to staffs, who nominate a few items for rubber-stamping. Few commanders ever nominate CCIRs themselves.

Understanding the system - and playing to the advantage

The corollary to this is understanding what data an adversary is relying or dependent on, and the opportunities for exploiting those feeds to distract, discombobulate, confuse, or divert attention. Indeed, by understanding how an adversary AI system works, it may well be possible to assist them in making poor decisions by amplifying certain information and data feeds on which their systems place heavy emphasis.

This is simply a digitisation of a much older practice of disinformation and counter command and control operations. Whilst Western forces have previously been rather good at this, a more recent focus on emerging and disruptive technology as an end in itself has not helped Western commanders and their forces succeed.

Simply put, whilst military personnel at all levels (and from everywhere) might be very excited about what AI can do for them, they are doing little for it (except provide [hot] air time). The effects of this neglect are likely to be catastrophic for the careers of military commanders. For soldiers, sailors, marines, aviators, and the nation state, the consequences will be more deadly still.

[1] Hicks, M.T., Humphries, J. & Slater, J. ChatGPT is bullshit. Ethics Inf Technol 26, 38 (2024). https://doi.org/10.1007/s10676-024-09775-5

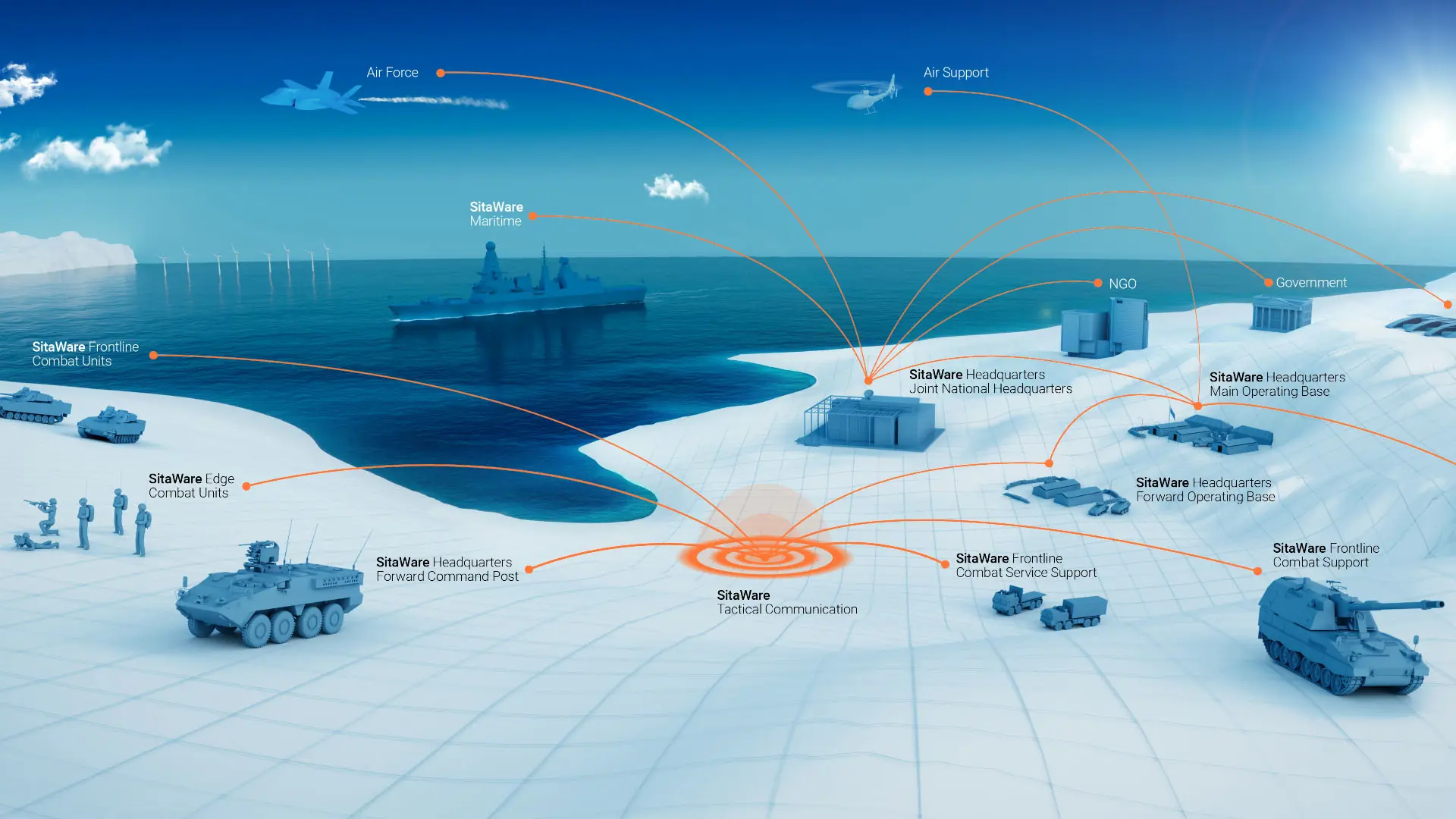

The 'Command and Control' podcast, hosted by Dr Peter Roberts and sponsored by Systematic, explores how our understanding of C2 needs to flex with modern requirements. It includes high-profile guests from NATO, Netherlands, UK, US, and Australian Armed Forces as well as thinktanks and industry experts to debate hot topics such as AI and multi-domain integration.

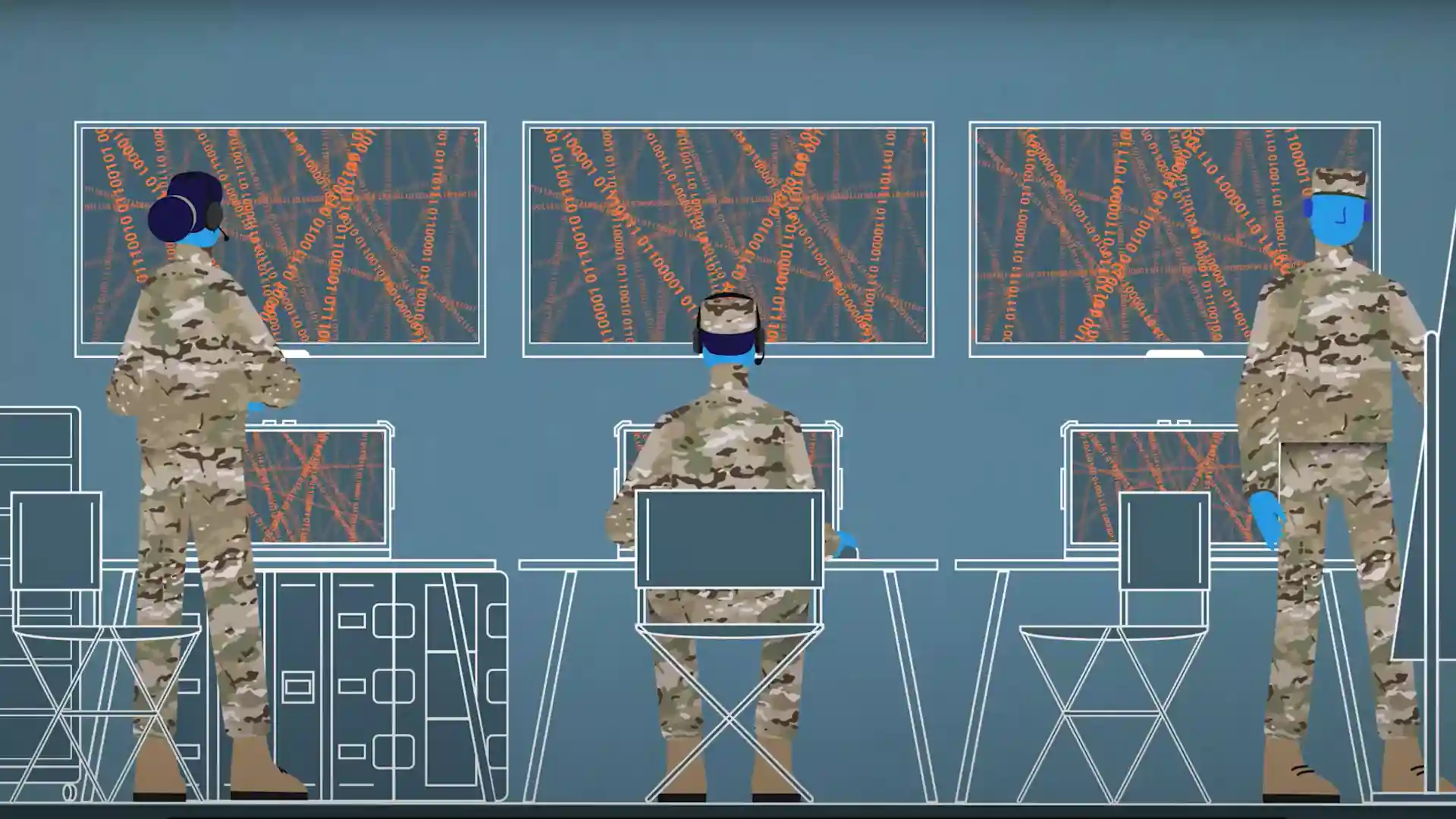

Video: SitaWare Insight explained

Leveraging Artificial Intelligence tools like Natural Language Processing, Computer Vision, and Anomaly Detection, SitaWare Insight rapidly uncovers and delivers critical intelligence to support your troops, as well as across joint, allied, and friendly forces.
